Network monitoring for cheese?

Securing the dairy manufacturing process of the future

The Factory of the Future (FoF) concept goes beyond Industry 4.0. According to the Aalto Factory of the Future, the typical enabling technologies for FoF include “Artificial Intelligence, Industry 4.0 architecture, Industrial Internet of Things, wireless communication (5G, Wifi6), edge/fog/cloud computing paradigms, virtual integration, digital twins, remote commissioning, operation and predictive maintenance, human-robot collaboration, simulation, virtual and augmented reality” (source: https://www.aalto.fi/en/futurefactory). The FoF concept is evolving as traditional manufacturing companies adopt Industry 4.0 solutions during their digital transformation. On the one hand, this gives companies new opportunities; on the other hand, it also introduces new kinds of risks and threats, especially related to the implementation of more complex technology solutions. Increased complexity requires new skills in, e.g., ICT, cybersecurity and systemic thinking, which are not easy to acquire. Nevertheless, companies must take this leap into the unknown, regardless of their current situation, just to keep up with the competition.

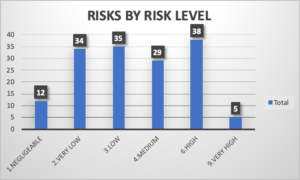

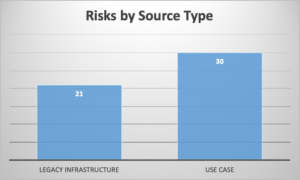

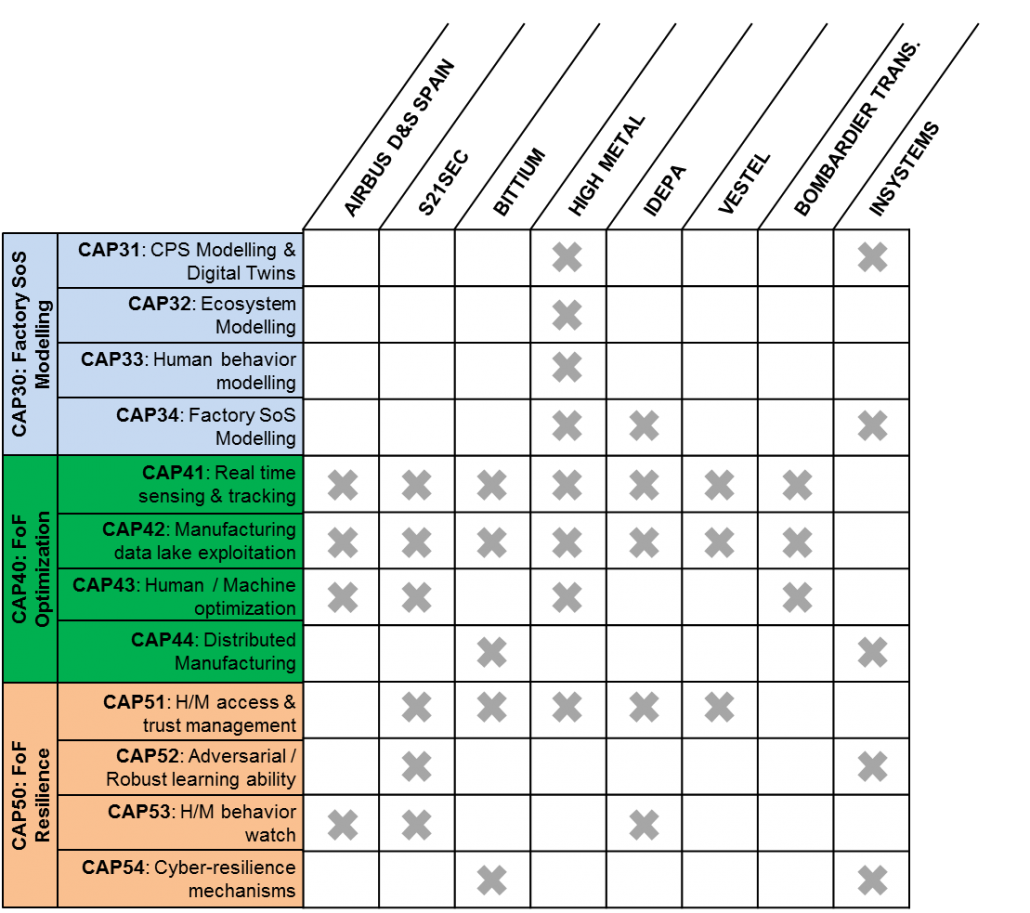

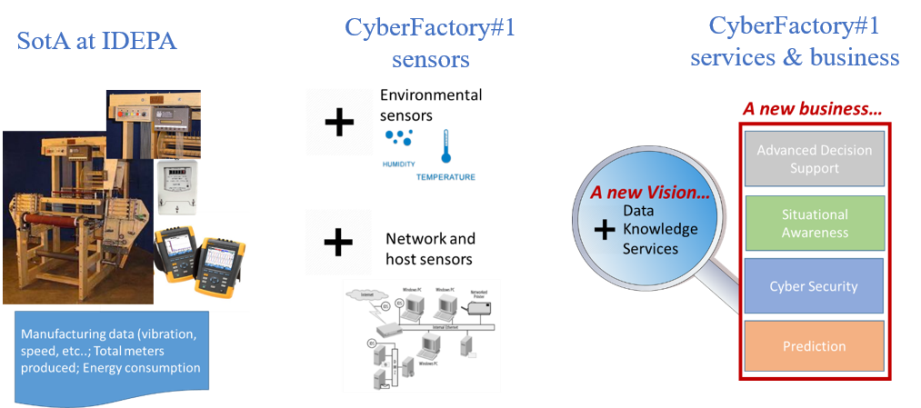

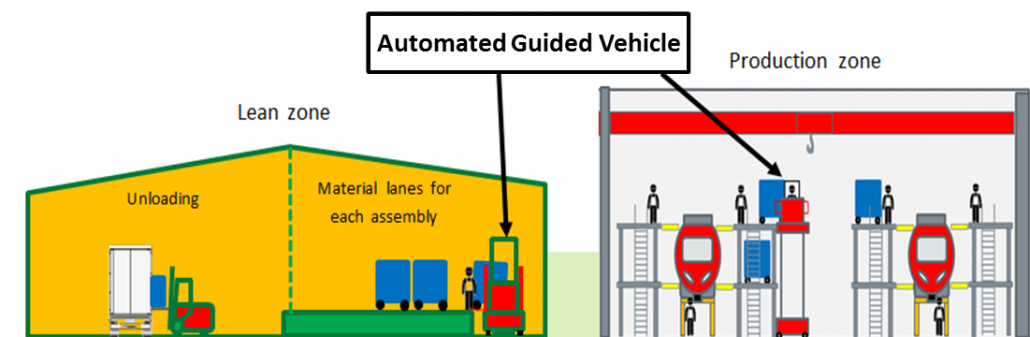

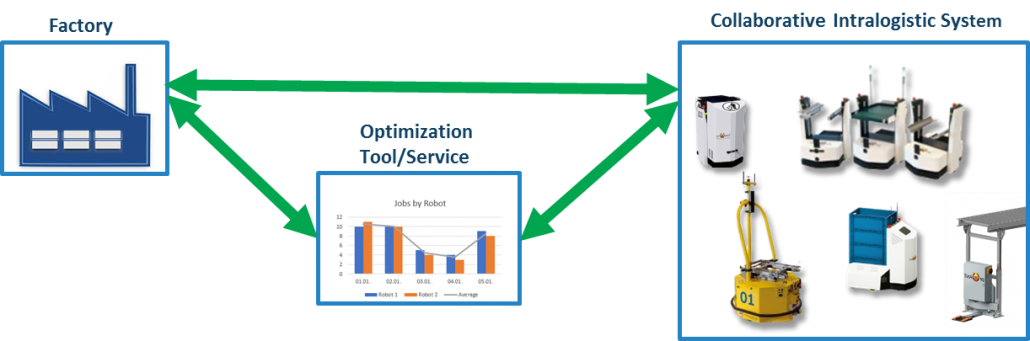

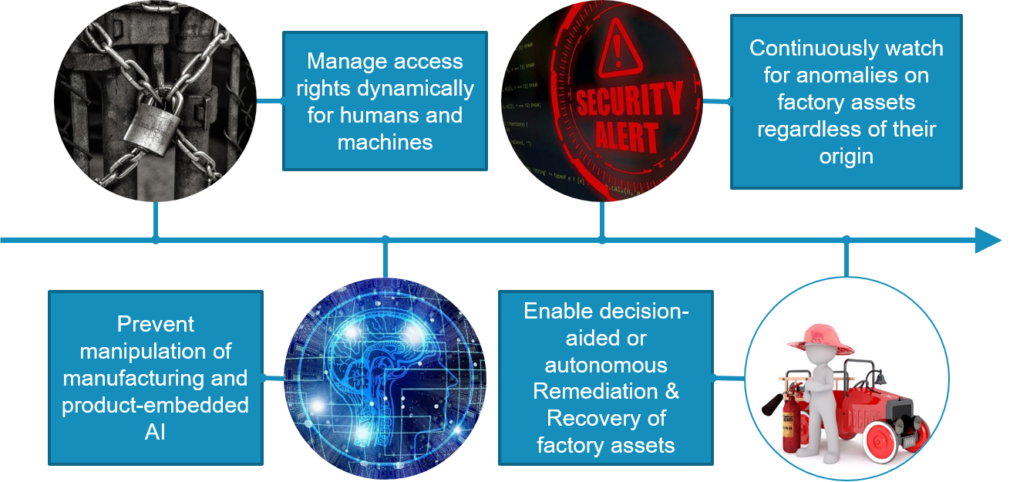

This article is part of the CyberFactory#1 project, which focuses on designing, developing, integrating and demonstrating a set of key enabling capabilities to foster the optimization and resilience of Factories of the Future (FoF). The project consists of 28 partners from seven countries: Canada, Finland, France, Germany, Portugal, Spain and Turkey. The work described here relates to the task “FoF resilience”, located in the bottom right-hand corner of the work package structure shown in Figure 1. The task focuses on enabling autonomous or decision-aided remediation and recovery of factory assets in the worst-case scenario, i.e., when an attack against the FoF or an individual system within the factory succeeds. The objective is to plan, model, simulate and practise the different ways of recovering the factory assets, and to select the optimal one in terms of time and resources. As a result, when the worst-case scenario occurs, cybersecurity professionals can act immediately instead of losing valuable time working out how to mitigate the attack and which countermeasures to take.
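The idea of selecting the best recovery option "in terms of time and resources" can be made concrete with a simple weighted-sum score. The sketch below is hypothetical throughout: the `RecoveryPlan` type, the plan names, the estimates and the weights are made-up assumptions, and a real decision aid would derive such figures from the modelling and simulation work rather than from fixed numbers.

```python
from dataclasses import dataclass


@dataclass
class RecoveryPlan:
    # Hypothetical estimates for one way of recovering a compromised asset.
    name: str
    hours: float         # expected time until the asset is back in production
    person_hours: float  # expected staff effort
    cost_eur: float      # expected material or service cost


def select_plan(plans, w_time=1.0, w_effort=0.5, w_cost=0.001):
    """Return the plan with the lowest weighted cost (weights are assumptions)."""
    def score(p):
        return w_time * p.hours + w_effort * p.person_hours + w_cost * p.cost_eur
    return min(plans, key=score)


# Illustrative alternatives for recovering the same (hypothetical) controller.
plans = [
    RecoveryPlan("restore configuration from offline backup", hours=2, person_hours=2, cost_eur=0),
    RecoveryPlan("rebuild configuration manually", hours=8, person_hours=8, cost_eur=0),
    RecoveryPlan("switch to standby controller", hours=1, person_hours=2, cost_eur=3000),
]

print(select_plan(plans).name)  # restore configuration from offline backup
```

Setting `w_cost=0` (time and effort only) makes the standby controller the winner instead, which shows how the weights encode the trade-off between recovery time and resources.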

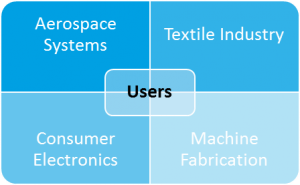

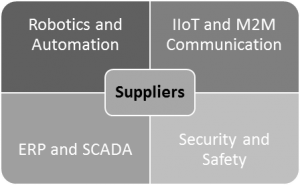

Figure 1. Structure of the FoF dynamic risk management and resilience work package

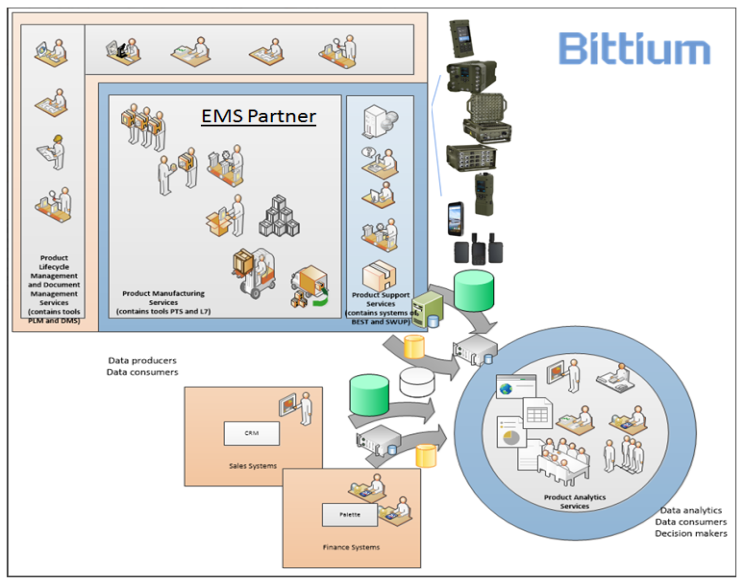

While the factory of the future offers great gains in efficiency as well as new capabilities for the manufacturer, the growing number of connections and networking requirements also opens new opportunities for a wide range of malicious cyber actors, from cyber criminals to competitors and even state actors. One of the project goals was to analyse and demonstrate the cybersecurity requirements. The idea of demonstrating malicious activity against a cheese robot platform came from the Finnish project partner High Metal (mkt-dairy.fi), and we chose it as our attack target.

The network monitoring system demonstrated in the video above was built using the Zeek network security monitor (https://zeek.org/) and the PreScope network visibility platform (https://ruggedtooling.com/solutions/prescope-visibility-platform/) provided by another Finnish project partner, Rugged Tooling. To make incident response more efficient, we combined the results with host logs using the ELK Stack (https://www.elastic.co/what-is/elk-stack). The demonstration was implemented on the Airbus CyberRange (https://airbus-cyber-security.com/resource/cyberrange/), a cybersecurity research and innovation platform.
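The idea of combining network and host data can be illustrated without the ELK Stack. The following minimal sketch assumes Zeek's default tab-separated `conn.log` format (a `#fields` header naming the columns, `-` for unset values) and a hypothetical host-log record with `ts`, `host` and `message` keys. It parses a trimmed `conn.log` and pairs each connection with host events logged on the responding machine within a time window. The addresses, ports, 60-second window and log messages are made up for illustration; this is not the demonstrator's actual pipeline.

```python
import io


def read_zeek_tsv(stream):
    """Parse a Zeek TSV log (e.g. conn.log) into a list of dicts."""
    fields = None
    records = []
    for line in stream:
        line = line.rstrip("\n")
        if line.startswith("#fields"):
            fields = line.split("\t")[1:]  # column names follow the '#fields' tag
        elif not line or line.startswith("#"):
            continue  # other metadata lines (#separator, #types, #close, ...)
        else:
            if fields is None:
                raise ValueError("data line before #fields header")
            values = line.split("\t")
            records.append({k: (None if v == "-" else v) for k, v in zip(fields, values)})
    return records


def correlate(conns, host_events, window=60.0):
    """Pair each connection with host events on the responder within +/- window seconds."""
    matches = []
    for c in conns:
        ts = float(c["ts"])
        for e in host_events:
            if e["host"] == c["id.resp_h"] and abs(e["ts"] - ts) <= window:
                matches.append((c["uid"], e["message"]))
    return matches


# Trimmed, hypothetical conn.log (a real one has many more columns).
SAMPLE_CONN_LOG = (
    "#separator \\x09\n"
    "#fields\tts\tuid\tid.orig_h\tid.orig_p\tid.resp_h\tid.resp_p\tproto\tconn_state\n"
    "1650000000.0\tC1\t10.0.0.66\t51515\t10.0.1.10\t22\ttcp\tSF\n"
    "1650000500.0\tC2\t10.0.0.66\t51516\t10.0.1.20\t502\ttcp\tS0\n"
)

# Hypothetical host-log events, e.g. forwarded from the target machines.
host_events = [
    {"ts": 1650000030.0, "host": "10.0.1.10", "message": "sshd: Accepted password for admin"},
    {"ts": 1650009999.0, "host": "10.0.1.20", "message": "service restarted"},
]

conns = read_zeek_tsv(io.StringIO(SAMPLE_CONN_LOG))
print(correlate(conns, host_events))  # [('C1', 'sshd: Accepted password for admin')]
```

Zeek can alternatively write JSON logs (by setting `LogAscii::use_json = T`), which is often the more convenient format for shipping into Elasticsearch.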

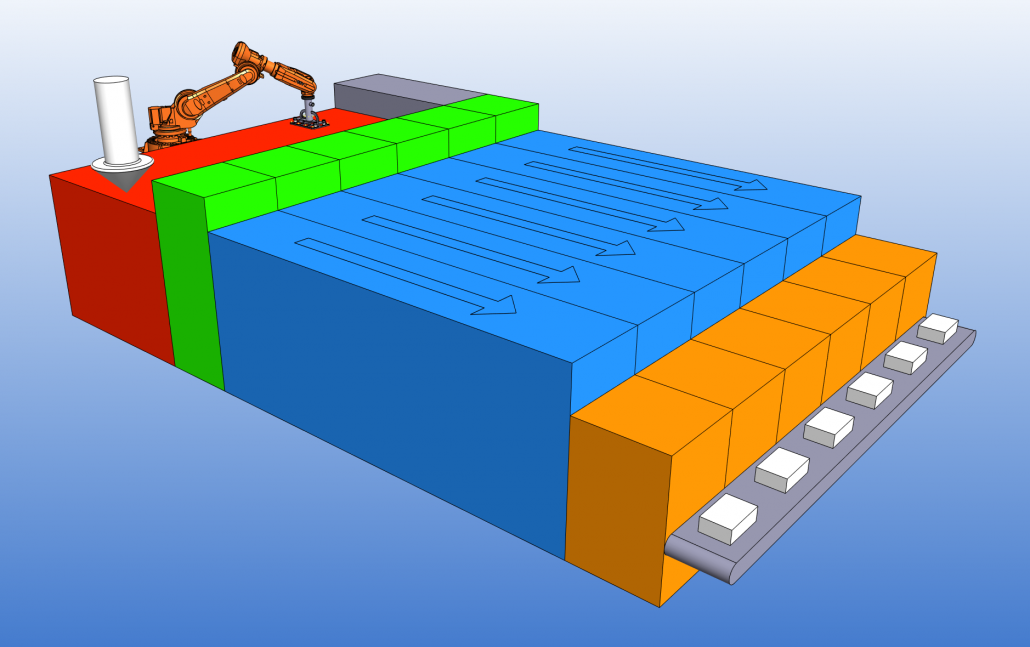

Figure 2. High Metal Cheese robot platform

While current cheese robot technology appears to be safe and secure (there are no killer cheese robots overthrowing humanity, at least not yet), a malicious attacker with administrator access to the configuration could modify critical parts of the cheese-making process and thereby degrade product quality. If the spoiled cheese were detected only after the maturing process is over, the amount of damaged goods could be enormous, because weeks or even months of production might be affected, possibly even endangering business continuity. In an organisation with insufficient real-time quality control, potentially hazardous cheese might reach the market and, in the worst case, endanger consumer health.

The demonstrator shows that by implementing a simple network behaviour monitoring system, a network attack can be detected even before the attacker gains access to the cheese production configuration system. While such systems are not foolproof, automated detection capabilities will deter the majority of attackers.
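As an illustration of the kind of behaviour rule a simple monitoring system can apply (not the rule set used in the demonstrator), the sketch below flags hosts that make unanswered or rejected connection attempts to many distinct host/port pairs, a typical sign of reconnaissance scanning that precedes an intrusion. It assumes records with Zeek `conn.log` keys, where `conn_state` `S0` means an attempt with no reply and `REJ` a rejected attempt; the threshold of 20 is a hypothetical value, and a production rule would also use a sliding time window.

```python
from collections import defaultdict


def detect_scanners(conns, threshold=20):
    """Return {originator: distinct_targets} for hosts at or above the threshold.

    conns: iterable of dicts with Zeek conn.log keys 'id.orig_h', 'id.resp_h',
    'id.resp_p' and 'conn_state'. Only failed attempts (S0, REJ) are counted.
    """
    targets = defaultdict(set)
    for c in conns:
        if c.get("conn_state") in {"S0", "REJ"}:
            targets[c["id.orig_h"]].add((c["id.resp_h"], c["id.resp_p"]))
    return {src: len(t) for src, t in targets.items() if len(t) >= threshold}


# Hypothetical traffic: one host probing 25 ports, another talking normally.
attempts = [
    {"id.orig_h": "10.0.0.66", "id.resp_h": "10.0.1.10", "id.resp_p": str(p), "conn_state": "S0"}
    for p in range(1, 26)
]
normal = [{"id.orig_h": "10.0.0.5", "id.resp_h": "10.0.1.10", "id.resp_p": "502", "conn_state": "SF"}] * 100

print(detect_scanners(attempts + normal))  # {'10.0.0.66': 25}
```

Counting distinct targets rather than raw connections keeps a busy but legitimate client (such as the repeated `SF` connections above) from triggering the rule.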

Future development ideas

The demonstrated network monitoring system could also be applied to other critical infrastructure systems, but we are especially interested in the safety of dairy manufacturing and other similar food production processes. Another idea for future development is to use artificial intelligence (AI) or machine learning (ML) to analyse the data. Since the data is already collected into a database, the necessary infrastructure is already in place. The aim is to reduce the workload of human operators in detecting anomalous activity and to lessen the cognitive load of monitoring the environment.
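Before any AI or ML model is trained, the data already in the database supports a much simpler statistical baseline. The sketch below is a z-score check, swapped in here as a placeholder and not an ML method: it fits the mean and standard deviation of historical hourly connection counts for one device and flags new counts that deviate by more than three standard deviations. The counts, the per-hour granularity and the threshold are hypothetical.

```python
import statistics


def fit_baseline(history):
    """Mean and sample standard deviation of counts seen during normal operation."""
    return statistics.fmean(history), statistics.stdev(history)


def is_anomalous(value, baseline, z_threshold=3.0):
    """True if value lies more than z_threshold standard deviations from the mean."""
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # flat history: any change is unusual
    return abs(value - mean) / stdev > z_threshold


# Hypothetical hourly connection counts for one controller (mean 40, stdev 2).
history = [40, 42, 38, 41, 39, 40, 43, 37]
baseline = fit_baseline(history)

for count in (45, 60, 35):
    print(count, is_anomalous(count, baseline))
# 45 False
# 60 True
# 35 False
```

Fitting the baseline on known-good history, rather than on the window being scored, keeps a single burst of attack traffic from inflating the statistics it is compared against.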

Acknowledgements

We wish to thank Lauri Nurminen from High Metal for providing the cheese production platform details for the demonstrator and helping us define the most critical threats, and Mikko Karjalainen from Rugged Tooling for providing their PreScope product and assisting with its setup.

Authors

Mirko Sailio (research scientist), Jarno Salonen (senior scientist) and Markku Mikkola (senior scientist), VTT Technical Research Centre of Finland.

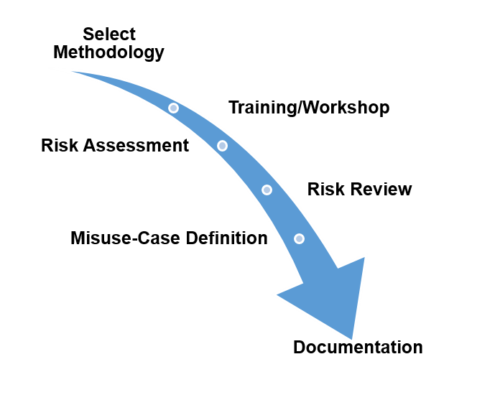

Figure 1: Misuse-Case Task Approach